Introduction

In my previous article, I covered some basic ways to modify our Nginx configuration for better performance. Let's expand on that today. I am using this as a reference.

Compression

Bandwidth may be cheap these days, but when you're serving thousands of requests every second, the need to use as little of it as possible becomes very apparent.

Browsers tell a web server various things through request headers. One of them is whether they can handle compressed responses. A client (that is, the browser) advertises this with the Accept-Encoding header, so a typical request may include a header that looks something like:

Accept-Encoding: gzip, deflate

This means we can compress the resource (typically a static file) on the server before sending it to the client. There are a few algorithms we can use for compression, the most common being gzip; deflate refers to zlib compression. However, all of this depends on the client: if it doesn't advertise support for compression, we can't use it at all.
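To make the negotiation concrete, here is a simplified Python sketch of the decision a server makes. It is an illustration, not nginx's actual implementation: the helper names `parse_accept_encoding` and `choose_encoding` are hypothetical, and the sketch only considers gzip, treating a missing header, an unknown coding, or a weight of `q=0` as "send it uncompressed".

```python
from typing import Dict, Optional


def parse_accept_encoding(header: str) -> Dict[str, float]:
    """Map each coding listed in an Accept-Encoding header to its q-value."""
    weights: Dict[str, float] = {}
    for item in header.split(","):
        parts = [part.strip() for part in item.split(";")]
        coding = parts[0].lower()
        if not coding:
            continue  # skip empty entries such as a trailing comma
        q = 1.0  # a coding without an explicit weight is fully acceptable
        for param in parts[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0  # treat a malformed weight as "not acceptable"
        weights[coding] = q
    return weights


def choose_encoding(header: Optional[str]) -> str:
    """Return 'gzip' if the client accepts it, otherwise 'identity'."""
    if not header:
        return "identity"  # no header at all: send the plain response
    weights = parse_accept_encoding(header)
    # an explicit gzip entry wins; otherwise fall back to the "*" wildcard
    q = weights.get("gzip", weights.get("*", 0.0))
    return "gzip" if q > 0 else "identity"
```

For example, `choose_encoding("gzip, deflate")` returns `"gzip"`, while `choose_encoding("br")` and `choose_encoding(None)` both return `"identity"`.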

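Since whether a response is compressed depends on each client, we need to set a few headers on the server's responses. In particular, `Vary: Accept-Encoding` tells caches that the response differs depending on the value of that request header.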
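Why does Vary matter? A shared cache (a CDN or proxy, say) normally stores responses by URL. If it ignores Accept-Encoding, it can hand a gzipped body to a client that never asked for one. The toy cache below is a hypothetical illustration in plain Python, not any real proxy's API; for simplicity it treats every stored response as carrying `Vary: Accept-Encoding` when `honour_vary` is on, and it compares header values only after trimming and lowercasing them.

```python
from typing import Dict, Optional, Tuple

CacheKey = Tuple[str, str]


class ToyCache:
    """A toy shared cache; with honour_vary=True it keys on Accept-Encoding."""

    def __init__(self, honour_vary: bool = True) -> None:
        self.honour_vary = honour_vary
        self._store: Dict[CacheKey, bytes] = {}

    def _key(self, url: str, request_headers: Dict[str, str]) -> CacheKey:
        if not self.honour_vary:
            # URL only: every client gets whatever body was stored first
            return (url, "")
        # Vary: Accept-Encoding -> store one entry per Accept-Encoding value
        accept = request_headers.get("Accept-Encoding", "")
        return (url, accept.strip().lower())

    def get(self, url: str, request_headers: Dict[str, str]) -> Optional[bytes]:
        return self._store.get(self._key(url, request_headers))

    def put(self, url: str, request_headers: Dict[str, str], body: bytes) -> None:
        self._store[self._key(url, request_headers)] = body
```

With `honour_vary=False`, a gzipped body stored for a gzip-capable client is also returned to a client that sent no Accept-Encoding header; with `honour_vary=True`, that second client gets a cache miss and the request goes back to the server.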

Suppose we want to use compression for all of our static files. Then we should define a location block as follows:

```nginx
location ~* \.(css|js|jpe?g|png|svg)$ {
    # the response varies with the client's Accept-Encoding
    add_header Vary Accept-Encoding;

    # we need not log these requests
    access_log off;

    # allow any cache (browser or shared proxy)
    # to store the resource
    add_header Cache-Control public;

    # this resource expires after 1 month
    expires 1M;
}
```

As you have seen, headers are set with the add_header directive. I have also included some additional configuration, with comments where required.
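The `expires 1M` line uses nginx's time syntax, in which `M` means a month of 30 days and a bare number means seconds (the same syntax covers the timeouts in the final configuration). For a positive time, nginx's documentation says `expires` sets an `Expires` header and `Cache-Control: max-age=t`, with t in seconds; our `add_header Cache-Control public` adds a second Cache-Control header alongside it. The hypothetical helper below is a minimal sketch of that unit conversion, not code taken from nginx, and it does not enforce nginx's rule that combined units go from most to least significant.

```python
import re

# Seconds per unit in nginx's time syntax ("M" is a 30-day month, "y" a 365-day year).
UNIT_SECONDS = {
    "ms": 0.001,
    "s": 1,
    "m": 60,
    "h": 3600,
    "d": 86400,
    "w": 7 * 86400,
    "M": 30 * 86400,
    "y": 365 * 86400,
}

# One number followed by an optional unit; "ms" is listed before "m" so it wins.
_PART = re.compile(r"(\d+)(ms|s|m|h|d|w|M|y)?")


def nginx_time_to_seconds(value: str) -> float:
    """Convert an nginx time value such as '1M', '12' or '1h 30m' to seconds."""
    text = "".join(value.split())  # whitespace between parts is optional
    if not text:
        raise ValueError("empty time value")
    total = 0.0
    pos = 0
    while pos < len(text):
        match = _PART.match(text, pos)
        if match is None:
            raise ValueError(f"invalid nginx time value: {value!r}")
        number, unit = match.groups()
        # a bare number (no suffix) means seconds
        total += int(number) * UNIT_SECONDS[unit or "s"]
        pos = match.end()
    return total
```

So `f"Cache-Control: max-age={int(nginx_time_to_seconds('1M'))}"` gives `Cache-Control: max-age=2592000`, which is the header we would expect nginx to emit for `expires 1M`.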

Now we need to enable gzip compression in the configuration. Let's put it in the http context so that it is inherited by all of our server contexts and location blocks.
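To get a feel for what gzip buys us, the sketch below uses Python's standard `gzip` module (which, like nginx's gzip module, is built on zlib) rather than nginx itself. It compresses a made-up, repetitive stylesheet at a few levels on the same 1 to 9 scale that `gzip_comp_level` uses, and it also compresses seeded random bytes as a stand-in for already-compressed PNG or JPEG data. Exact byte counts will differ from what nginx produces, since nginx has its own buffer and window settings.

```python
import gzip
import random

# A made-up, repetitive stylesheet standing in for a real static file.
css = b".button { color: #333; padding: 4px 8px; border-radius: 4px; }\n" * 200

# Seeded random bytes approximate already-compressed data such as PNG or JPEG.
already_compressed = random.Random(42).randbytes(len(css))


def gzip_size(data: bytes, level: int) -> int:
    """Size in bytes of `data` after gzip compression at `level` (1-9)."""
    return len(gzip.compress(data, compresslevel=level))


if __name__ == "__main__":
    print(f"original stylesheet: {len(css)} bytes")
    for level in (1, 4, 9):
        print(f"  gzip level {level}: {gzip_size(css, level)} bytes")
    print(f"random bytes: {len(already_compressed)} -> "
          f"{gzip_size(already_compressed, 4)} bytes at level 4")
```

Text such as CSS shrinks dramatically, while the random bytes come out slightly larger than they went in, which is why compressing image formats that are already compressed mostly costs CPU time for no gain.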

So let’s have a look at our final configuration.

worker_processes auto;

events {

worker_connections 512;

}

http {

include mime.types;

client_body_buffer_size 10K;

client_max_body_size 8M;

client_header_buffer_size 1K;

client_body_timeout 12;

client_header_timeout 12;

keepalive_timeout 15;

send_timeout 10;

sendfile on;

tcp_nopush on;

gzip on;

gzip_comp_level 4;

gzip_types text/css text/javascript;

gzip_types image/png image/jpeg image/svg+xml;

server {

listen 80;

server_name openbsd.local;

root /var/www/html;

index index.html;

location / {

try_files $uri $uri/ =404;

}

location ~* \.(css|js|png|jpe?g|svg) {

add_header Vary Accept-Encoding;

access_log off;

add_header Cache-Control public;

expires 1M;

}

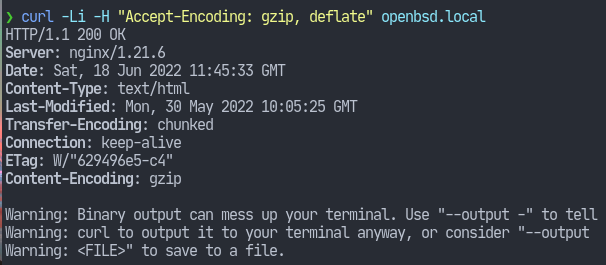

}curl comes as an excellent utility to test this out. We can supply the Accept-Encoding header using the -H flag.

And indeed, nginx sends us the compressed response. Our browsers would decompress it automatically.

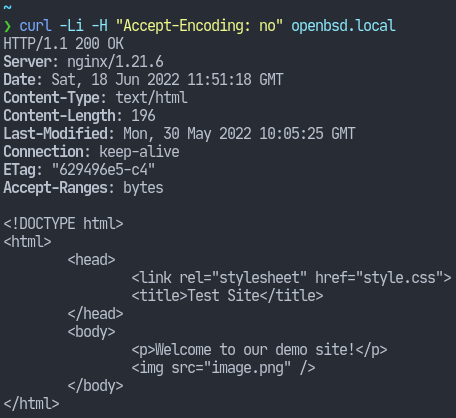

And if we send an invalid value in the header, or none at all, nginx simply sends the uncompressed response.
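The same check can be scripted. Below is a minimal Python sketch using only the standard library; the helper names `decode_body` and `fetch` are hypothetical. Unlike a browser, `urllib` does not decompress responses on its own, so `decode_body` undoes a gzip Content-Encoding explicitly and rejects codings it does not know.

```python
import gzip
import urllib.request
from typing import Optional, Tuple


def decode_body(content_encoding: Optional[str], body: bytes) -> bytes:
    """Undo the Content-Encoding the server applied, as a browser would."""
    if content_encoding is None or content_encoding.lower() == "identity":
        return body  # the server sent the response uncompressed
    if content_encoding.lower() == "gzip":
        return gzip.decompress(body)
    raise ValueError(f"unsupported Content-Encoding: {content_encoding}")


def fetch(url: str, accept_encoding: Optional[str] = "gzip") -> Tuple[Optional[str], bytes]:
    """GET `url`, optionally advertising an encoding; return (encoding, decoded body)."""
    request = urllib.request.Request(url)
    if accept_encoding is not None:
        request.add_header("Accept-Encoding", accept_encoding)
    with urllib.request.urlopen(request, timeout=5) as response:
        encoding = response.headers.get("Content-Encoding")
        return encoding, decode_body(encoding, response.read())
```

Assuming a hypothetical `style.css` under `/var/www/html`, calling `fetch("http://openbsd.local/style.css")` against the configuration above should report `"gzip"` as the encoding, while `fetch("http://openbsd.local/style.css", accept_encoding=None)` or an invalid value such as `"foo"` should report `None`.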

So that’s it for today. Hope you learnt something useful and thank you for reading.