This is another Kubernetes-related notebook entry, in which I will document the procedure for setting up a Kubernetes cluster using K3S in virtual machines created with Canonical’s Multipass. In addition, I will describe how to configure kubectl, the Kubernetes command-line cluster management tool, to manage the K3S cluster from outside the virtual machines in which the cluster runs.

Multipass runs on Windows, Mac OS X and Linux, so the procedure described in this article should apply to all three environments, though I have only tested it on Mac OS X.

Background

The astute reader will recall a recent article of mine in which I described how to run a Kubernetes cluster in Docker, and may wonder why I have now moved from Kind to K3S.

There are two reasons for this:

- K3S claims to be production-ready.

Perhaps not Google-scale production-ready, but ready enough for my needs, I suspect.

- K3S is more similar to a real Kubernetes cluster.

During the short time that I have been working with Kind, I have encountered two issues that made me take a look at K3S:

  - A Kind cluster cannot be restarted.
  - Kind does not support the LoadBalancer service type.

Prerequisites

To prepare for K3S, I will install a couple of tools that I will need and then create the virtual machines in which K3S will run.

Tools

Before installing K3S, the following tools need to be installed on the computer that is to act as a host for the virtual machines:

- Multipass

Canonical’s lightweight virtual machine manager, with which instances of Ubuntu can be run.

Instructions on how to install Multipass can be found here.

- kubectl

As before, kubectl is the Kubernetes command-line cluster management tool.

Instructions on how to install kubectl can be found here.
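Once both tools are installed, a quick way to confirm that they are on the PATH is a small shell check. This is a minimal sketch; the function name require_tools is hypothetical and not part of either tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report every command from the argument list
# that cannot be found on the PATH. Returns 0 only if all are found.
require_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage (not executed here):
#   require_tools multipass kubectl && echo "all tools installed"
```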

Virtual Machines

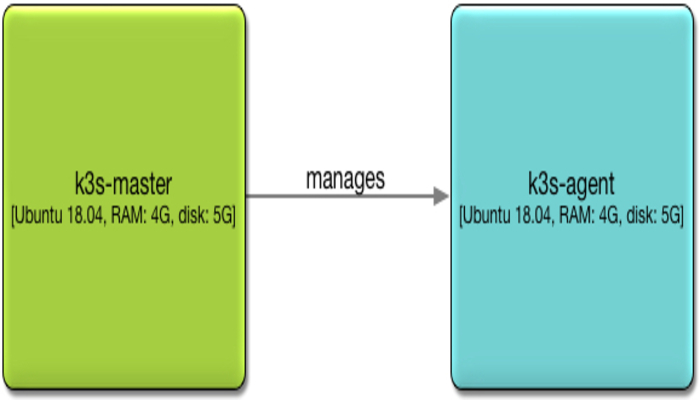

I will create a Kubernetes cluster that consists of two nodes: one master and one agent. For this, I will need two virtual machines.

Having installed Multipass, open a terminal window:

- Create and start the master node virtual machine:

multipass launch --name k3s-master --mem 4G --disk 5G 18.04

- Create and start the agent node virtual machine:

multipass launch --name k3s-agent --mem 4G --disk 5G 18.04

- Verify the two virtual machines:

multipass list

The output should be similar to the following:

Name                    State             IPv4             Image
k3s-agent               Running           192.168.64.10    Ubuntu 18.04 LTS
k3s-master              Running           192.168.64.7     Ubuntu 18.04 LTS

Note the IP address of k3s-master; it will be needed later when installing K3S on the agent node.
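The two launch commands and the IP address lookup can also be scripted from the VM host. The sketch below reuses the Multipass flags shown above; the function names launch_k3s_vms and vm_ipv4 are hypothetical, and vm_ipv4 simply picks the third column of the default table output of multipass list.

```shell
#!/usr/bin/env bash
# Sketch: create the K3S VMs and look up an instance's IPv4 address.
# Uses the same Multipass flags as the commands above (--name, --mem,
# --disk and the 18.04 image); function names are hypothetical.

# Launch one VM per name given as an argument; stop at the first failure.
launch_k3s_vms() {
  local name
  for name in "$@"; do
    multipass launch --name "$name" --mem 4G --disk 5G 18.04 || return 1
  done
}

# Print the IPv4 column of the row whose first column matches the name.
# Reads the default table output of "multipass list" from stdin.
vm_ipv4() {
  awk -v name="$1" '$1 == name { print $3 }'
}

# Example usage (not executed here):
#   launch_k3s_vms k3s-master k3s-agent
#   MASTER_IP="$(multipass list | vm_ipv4 k3s-master)"
```

Parsing the table is deliberately simple and may break if the layout changes; Multipass can also emit machine-readable output (for example multipass list --format json), which would be a sturdier basis.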

Install K3S

The following sections describe how to install K3S on the master and agent nodes.

Install K3S on Master Node

In this step, I will install K3S on the master node and retrieve the master node token, which will later be needed to join the agent node to the cluster managed by the master.
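As an alternative to the interactive steps, the same installation can be run from the VM host with multipass exec, which executes a command inside a named instance. This is a minimal sketch; the function name install_k3s_master is hypothetical, and the install command is the one used in the manual steps.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install K3S in server (master) mode inside the
# named VM by piping the official install script to sh, as in the
# manual steps. "bash -c" makes the pipe run inside the VM rather
# than on the host.
install_k3s_master() {
  local vm="${1:-k3s-master}"
  multipass exec "$vm" -- bash -c 'curl -sfL https://get.k3s.io | sh -'
}

# Example usage (not executed here):
#   install_k3s_master k3s-master
```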

- Open a terminal window if needed.

- Open a shell on the k3s-master VM:

multipass shell k3s-master

- Install the latest version of K3S on the master node:

curl -sfL https://get.k3s.io | sh -

Output similar to the following should appear in the console:

[INFO] Finding latest release

[INFO] Using v1.17.4+k3s1 as release

[INFO] Downloading hash https://github.com/rancher/k3s/releases/download/v1.17.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/rancher/k3s/releases/download/v1.17.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

chcon: can't apply partial context to unlabeled file '/usr/local/bin/k3s'

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

[INFO] systemd: Starting k3s

- Verify the K3S installation:

k3s --version

The output from the above command should look like this:

k3s version v1.17.4+k3s1 (3eee8ac3)

- Retrieve the master node token:

sudo cat /var/lib/rancher/k3s/server/node-token

The token will look similar to this:

K103a5c4b22b4de5715dff39d58b1325c38e84217d40146c0f65249d439cab1a531::server:271a74dadde37a98053a984a8dc676f8

- Exit the k3s-master VM shell:

exit

Install K3S on Agent Node
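The token retrieval on the master and the agent installation described here can also be driven entirely from the VM host with multipass exec. A minimal sketch under that assumption; the function names k3s_node_token and install_k3s_agent are hypothetical, and the commands mirror the manual steps, including the K3S_URL and K3S_TOKEN variables.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the node token stored on the master VM.
k3s_node_token() {
  local master="${1:-k3s-master}"
  multipass exec "$master" -- sudo cat /var/lib/rancher/k3s/server/node-token
}

# Hypothetical helper: install K3S in agent mode inside the agent VM,
# pointing it at the master's API server on port 6443.
# Arguments: agent VM name, master IP address, node token.
install_k3s_agent() {
  local agent="$1" master_ip="$2" token="$3"
  multipass exec "$agent" -- bash -c \
    "curl -sfL https://get.k3s.io | K3S_URL='https://${master_ip}:6443' K3S_TOKEN='${token}' sh -"
}

# Example usage (not executed here):
#   TOKEN="$(k3s_node_token k3s-master)"
#   install_k3s_agent k3s-agent 192.168.64.7 "$TOKEN"
```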

With the IP address and the node token of the K3S master, I am now ready to install K3S on the agent node:

- Open a terminal window if needed.

- Open a shell on the k3s-agent VM:

multipass shell k3s-agent

- Install the latest version of K3S for an agent node.

Replace the IP address in the K3S_URL variable with that of k3s-master, and the value of the K3S_TOKEN variable with the node token:

curl -sfL https://get.k3s.io | K3S_URL="https://192.168.64.7:6443" K3S_TOKEN="K103a5c4b22b4de5715dff39d58b1325c38e84217d40146c0f65249d439cab1a531::server:271a74dadde37a98053a984a8dc676f8" sh -

Output similar to this should appear in the console:

[INFO] Finding latest release

[INFO] Using v1.17.4+k3s1 as release

[INFO] Downloading hash https://github.com/rancher/k3s/releases/download/v1.17.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/rancher/k3s/releases/download/v1.17.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

chcon: can't apply partial context to unlabeled file '/usr/local/bin/k3s'

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

[INFO] systemd: Starting k3s-agent

- Exit the k3s-agent VM shell:

exit

Verify the Cluster

Before configuring kubectl in the Multipass VM host (or elsewhere, outside of the K3S virtual machines), I want to quickly verify that the K3S cluster is properly set up.
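Besides the interactive steps below, the same check can be run from the VM host with multipass exec, using the kubectl bundled with K3S on the master. This is a sketch with hypothetical function names; all_nodes_ready only accepts a STATUS of exactly Ready, so a value such as Ready,SchedulingDisabled counts as not ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the cluster nodes by running the kubectl
# bundled with K3S on the master VM, without opening a shell there.
k3s_nodes() {
  local master="${1:-k3s-master}"
  multipass exec "$master" -- sudo kubectl get nodes -o wide
}

# Hypothetical helper: read "kubectl get nodes" output from stdin and
# succeed only if there is at least one node row (header skipped) and
# every node row has STATUS exactly "Ready".
all_nodes_ready() {
  awk 'NR > 1 { total++; if ($2 == "Ready") ready++ }
       END { exit !(total > 0 && ready == total) }'
}

# Example usage (not executed here):
#   k3s_nodes k3s-master | all_nodes_ready && echo "cluster ready"
```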

- Open a terminal window if needed.

- Open a shell on the k3s-master VM:

multipass shell k3s-master

- List the nodes in the cluster:

sudo kubectl get nodes -o wide

In my case, the node list looks like this:

NAME         STATUS   ROLES    AGE     VERSION        INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
k3s-agent    Ready    <none>   5m31s   v1.17.4+k3s1   192.168.64.10   <none>        Ubuntu 18.04.4 LTS   4.15.0-91-generic   containerd://1.3.3-k3s2
k3s-master   Ready    master   39m     v1.17.4+k3s1   192.168.64.7    <none>        Ubuntu 18.04.4 LTS   4.15.0-91-generic   containerd://1.3.3-k3s2

- Exit the k3s-master VM shell:

exit

Configure External kubectl

With the K3S cluster up and running, I will now configure the kubectl installed on the VM host, which is my development computer, so that it can manage the K3S cluster.

Retrieve Master Node kubectl Configuration

To configure an external kubectl to manage the newly created K3S cluster, I will copy the kubectl configuration from the master node and then make a few modifications:

- Open a terminal window if needed.

- Open a shell on the k3s-master VM:

multipass shell k3s-master

- View the master node’s kubectl configuration:

sudo kubectl config view

In my case, it looks like this:

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://127.0.0.1:6443
  name: default
contexts:
- context:
    cluster: default
    user: default
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: default
  user:
    password: ea3527757bd8c5c9ae8dbb00fef7595c
    username: admin

Note that your configuration file will not be identical to the above; the password, for example, will be different.

- Exit the k3s-master VM shell:

exit
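The copy-and-edit procedure can also be scripted from the VM host. K3S writes its kubeconfig to /etc/rancher/k3s/k3s.yaml on the master and, unlike kubectl config view without --raw, that file contains the actual certificate data. The sketch below therefore only rewrites the server address. It assumes the master's serving certificate covers its IP address; if kubectl reports a certificate error, the insecure-skip-tls-verify approach described next still applies. The function name external_kubeconfig is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the API server address in a K3S
# kubeconfig read from stdin so that it points at the master VM
# instead of the loopback address. All other lines pass through.
# Argument: IP address of the k3s-master VM.
external_kubeconfig() {
  local master_ip="$1"
  sed "s#https://127\.0\.0\.1:6443#https://${master_ip}:6443#"
}

# Example usage (not executed here; overwrites any existing config):
#   mkdir -p ~/.kube
#   multipass exec k3s-master -- sudo cat /etc/rancher/k3s/k3s.yaml \
#     | external_kubeconfig 192.168.64.7 > ~/.kube/config
```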

Replace kubectl Configuration

I can now replace the kubectl configuration in the VM host with a modified version of the kubectl configuration from the master node:

- Open a terminal window if needed.

- Edit, or create if it does not exist, the local kubectl configuration:

vi ~/.kube/config

- Replace the contents, if any, with the kubectl configuration from the master node.

- Change the server address to the IP address of the k3s-master VM, and replace the certificate-authority-data entry with insecure-skip-tls-verify: true so that TLS verification is skipped.

The result will look like this, with lines 4 and 5 being modified:

apiVersion: v1
clusters:
- cluster:
    insecure-skip-tls-verify: true
    server: https://192.168.64.7:6443
  name: default
contexts:
- context:
    cluster: default
    user: default
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: default
  user:
    password: ea3527757bd8c5c9ae8dbb00fef7595c
    username: admin

- Save the configuration and exit vi:

:wq

- Verify the configuration:

kubectl get nodes

The same nodes as listed from inside the master node should be listed now as well, albeit in a more compact list:

NAME         STATUS   ROLES    AGE   VERSION
k3s-master   Ready    master   66m   v1.17.4+k3s1
k3s-agent    Ready    <none>   32m   v1.17.4+k3s1

Restart a K3S Cluster

As mentioned in the introduction, one of the reasons for switching from Kind to K3S is the ability to restart a K3S cluster. If I need to interrupt my Kubernetes-related work, I just shut down my computer, which hosts the K3S virtual machines. When I later restart the computer, all I have to do to restart the K3S cluster and restore it to its previous state is to start the cluster's virtual machines with the following two commands:

multipass start k3s-master

multipass start k3s-agent
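The two start commands can be combined with a wait for the nodes to report Ready, using kubectl wait from the VM host. This is a sketch assuming ~/.kube/config already points at the master as configured above; the function name, the retry count and the timeouts are arbitrary, hypothetical choices. The retries are there because the API server may not accept connections immediately after the VMs start, and node status can briefly reflect the state recorded before shutdown, so treat this as a convenience rather than a strict health check.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start the cluster VMs (master first, as above)
# and then wait until kubectl on the VM host sees every node as Ready.
# Retries up to 12 times, since the API server may still be starting.
restart_k3s_cluster() {
  local vm attempt=0
  for vm in k3s-master k3s-agent; do
    multipass start "$vm" || return 1
  done
  while [ "$attempt" -lt 12 ]; do
    kubectl wait --for=condition=Ready node --all --timeout=60s && return 0
    attempt=$((attempt + 1))
    sleep 10
  done
  return 1
}

# Example usage (not executed here):
#   restart_k3s_cluster && kubectl get nodes
```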