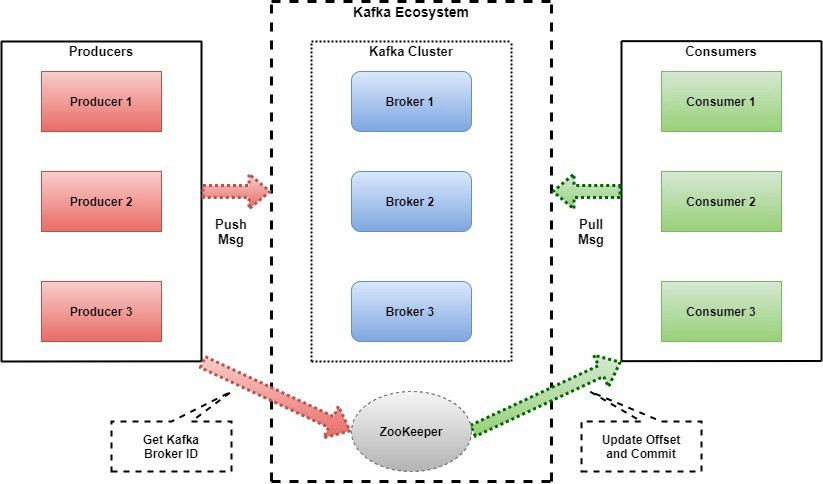

A Kafka and Zookeeper cluster setup runs multiple Kafka and Zookeeper instances on separate VMs to provide high availability (HA). Before looking at what a Kafka cluster is and what HA means, let's cover what Kafka is and where it is used.

What is Kafka?

Apache Kafka is an open-source distributed event streaming platform. It was originally developed at LinkedIn and later became an Apache Software Foundation project. Depending on hardware and configuration, a cluster can process more than a million messages per second.

Kafka Use Cases:

Some of the popular use cases of Kafka are:

- Messaging

- Website Activity Tracking

- Metrics

- Log Aggregation

- Stream Processing

- Event Sourcing

- Commit Log

What is Kafka cluster?

A Kafka cluster consists of one or more servers (Kafka brokers) that coordinate through Zookeeper. For production-grade clusters, it is advisable to have at least 3 Kafka broker nodes and 3 separate nodes for Zookeeper.
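The reason three Zookeeper nodes is the usual minimum comes down to majority quorum: a Zookeeper ensemble stays available only while more than half of its members are up. The shell sketch below works through that arithmetic. The function name `tolerated_failures` is made up for illustration.

```shell
# Hypothetical helper: how many node failures an ensemble of $1 members
# can survive while a majority (quorum) is still running.
tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 5; do
  echo "ensemble of $n node(s): tolerates $(tolerated_failures "$n") failure(s)"
done
```

Three is the smallest ensemble that survives the loss of a node, and an even node count adds no extra tolerance over the odd count just below it.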

Installation Steps for Kafka Cluster:

We will set up a Kafka HA cluster consisting of 3 broker nodes. These 3 brokers will connect to a Zookeeper service running on 3 separate nodes.

Prerequisites:

- 3 VMs for Zookeeper, with 2 cores, 4GB RAM, 50 GB boot disk and 100 GB additional disk.

- 3 VMs for Kafka, with 4 cores and 16GB RAM, 50 GB boot disk and 500 GB additional disk.

Step 1: Mount the additional volumes

Format and mount the additional disk so that Kafka and Zookeeper data is stored on a separate disk. Run the commands below on all 6 nodes (3 Kafka and 3 Zookeeper). They assume the additional disk is /dev/sdb; note that mkfs erases anything already on it.

sudo mkfs.ext4 -m 0 -F -E lazy_itable_init=0,lazy_journal_init=0,discard /dev/sdb

sudo mkdir -p /data

sudo mount -o discard,defaults /dev/sdb /data

sudo chmod a+w /data

sudo cp /etc/fstab /etc/fstab.backup

echo UUID=`sudo blkid -s UUID -o value /dev/sdb` /data ext4 discard,defaults,nofail 0 2 | sudo tee -a /etc/fstab

To confirm:

lsblk
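Beyond eyeballing the lsblk output, it can help to confirm that the mount will survive a reboot. The sketch below checks that a mount point has an entry in an fstab-style file. `fstab_has_mount` is a hypothetical helper name, and the check only reads files.

```shell
# Hypothetical helper: succeed if the fstab-style file given as $1 has a
# non-comment entry whose mount point (second field) equals $2.
fstab_has_mount() {
  awk -v mp="$2" '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$1"
}

# Read-only check; nothing on the node is modified.
if fstab_has_mount /etc/fstab /data; then
  echo "/data has an entry in /etc/fstab"
else
  echo "/data has no entry in /etc/fstab"
fi
```

To check that the disk is mounted right now, rather than only listed for the next boot, `mountpoint -q /data` from util-linux returns success when /data is a mount point.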

Step 2: Increase the ulimit

Raise the process, open-file, and locked-memory limits on all 6 nodes with the following commands:

echo "root soft nproc 1000000" | sudo tee -a /etc/security/limits.conf

echo "* soft nproc 1000000" | sudo tee -a /etc/security/limits.conf

echo "* hard nproc 1000000" | sudo tee -a /etc/security/limits.conf

echo "root hard nproc 1000000" | sudo tee -a /etc/security/limits.conf

echo "root hard nofile 1000000" | sudo tee -a /etc/security/limits.conf

echo "* hard nofile 1000000" | sudo tee -a /etc/security/limits.conf

echo "* soft nofile 1000000" | sudo tee -a /etc/security/limits.conf

echo "root soft nofile 1000000" | sudo tee -a /etc/security/limits.conf

echo "root hard memlock unlimited" | sudo tee -a /etc/security/limits.conf

echo "root soft memlock unlimited" | sudo tee -a /etc/security/limits.conf

echo "* hard memlock unlimited" | sudo tee -a /etc/security/limits.conf

echo "* soft memlock unlimited" | sudo tee -a /etc/security/limits.conf

echo "fs.file-max = 1000000" | sudo tee -a /etc/sysctl.conf

echo 'session required pam_limits.so' | sudo tee -a /etc/pam.d/su

Step 3: Install Java

Kafka and Zookeeper need Java to run. Install Java on all the VMs using the commands below:

apt-get update -y

apt-get upgrade -y

apt install openjdk-8-jdk-headless -y

Step 4: Download the Kafka Package:

Download the Kafka package using the command below on all 6 nodes:

wget https://archive.apache.org/dist/kafka/2.3.0/kafka_2.11-2.3.0.tgz

Move the package to /opt and extract it there:

mv kafka_2.11-2.3.0.tgz /opt

cd /opt

tar -zxvf kafka_2.11-2.3.0.tgz

Then rename the extracted directory from kafka_2.11-2.3.0 to kafka using the following command:

mv kafka_2.11-2.3.0 kafka

Step 5: Install Zookeeper:

On all 3 Zookeeper nodes, open /opt/kafka/config/zookeeper.properties and replace its content with the following, substituting the IPs of your Zookeeper machines:

dataDir=/data/zookeeper

clientPort=2181

maxClientCnxns=128

initLimit=10

syncLimit=5

tickTime=6000

server.1=<zookeeper_1_IP>:2888:3888

server.2=<zookeeper_2_IP>:2888:3888

server.3=<zookeeper_3_IP>:2888:3888

Each Zookeeper node needs a unique id file called myid in its dataDir. Create the myid file at /data/zookeeper with the following content:

For zookeeper1:

1

For zookeeper2 and zookeeper3 it will be 2 and 3 respectively.
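Creating the myid file can be scripted so the same snippet works on every Zookeeper node with only the id changed. The following is a minimal sketch. `write_myid` is a hypothetical helper, and the example writes to a scratch directory rather than the real /data/zookeeper.

```shell
# Hypothetical helper: write the Zookeeper id $2 into <dataDir>/myid,
# where $1 is the dataDir from zookeeper.properties.
write_myid() {
  mkdir -p "$1"
  echo "$2" > "$1/myid"
}

# Example run against a scratch directory so nothing real is touched.
# On zookeeper1 the call would be: write_myid /data/zookeeper 1
scratch=$(mktemp -d)
write_myid "$scratch/zookeeper" 1
cat "$scratch/zookeeper/myid"
```

The id in each myid file has to match the N in that node's server.N line of zookeeper.properties.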

Step 6: Create and start Zookeeper service.

Zookeeper will run as a systemd service. On Ubuntu, create a zookeeper.service file in /etc/systemd/system with the following content:

[Unit]

Description=Zookeeper

Wants=network-online.target

After=network-online.target

[Service]

User=root

Group=root

Type=simple

LimitNOFILE=65536

LimitNPROC=65536

LimitMEMLOCK=infinity

Restart=on-failure

RestartSec=5s

ExecStart=/opt/kafka/bin/zookeeper-server-start.sh /opt/kafka/config/zookeeper.properties

Environment="KAFKA_HEAP_OPTS=-Xmx3G -Xms3G -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/data/logs/mps -XX:+CrashOnOutOfMemoryError"

[Install]

WantedBy=multi-user.target

To start the Zookeeper service, execute the following commands on all 3 Zookeeper nodes:

systemctl daemon-reload

systemctl start zookeeper

To make the Zookeeper service start automatically whenever the VM boots, enable it with the following command:

systemctl enable zookeeper

Step 7: Install Kafka.

On all 3 Kafka nodes, open /opt/kafka/config/server.properties and enter the following broker configuration, replacing the placeholders with your Kafka and Zookeeper IPs:

broker.id=1

listeners=PLAINTEXT://<kafka_ip_1>:9092

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/data/kafka/

num.partitions=3

num.recovery.threads.per.data.dir=3

offsets.topic.replication.factor=2

transaction.state.log.replication.factor=2

transaction.state.log.min.isr=2

log.retention.hours=24

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

log.cleanup.policy=delete

zookeeper.connect=<zookeeper_ip_1>:2181,<zookeeper_ip_2>:2181,<zookeeper_ip_3>:2181

zookeeper.connection.timeout.ms=30000

group.initial.rebalance.delay.ms=0

default.replication.factor = 2

min.insync.replicas = 2

unclean.leader.election.enable=false

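auto.create.topics.enable=false

The value of broker.id must be unique for each broker (for example 1, 2 and 3), and listeners must use that broker's own IP. Note that with a replication factor of 2 and min.insync.replicas=2, producers that use acks=all cannot write to a partition while either of its replicas is offline; on a 3-broker cluster, a replication factor of 3 is a common way to avoid this.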
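Since broker.id and listeners are the only settings in this file that change from one broker to the next, they can be generated instead of edited by hand. The sketch below prints both lines for each broker. `broker_overrides` is a hypothetical helper, and the addresses are placeholders from the 192.0.2.0/24 documentation range, not real hosts.

```shell
# Hypothetical helper: print the two settings that differ per broker,
# given the broker id as $1 and the broker's IP as $2.
broker_overrides() {
  printf 'broker.id=%s\n' "$1"
  printf 'listeners=PLAINTEXT://%s:9092\n' "$2"
}

# Placeholder addresses; substitute the IPs of your Kafka VMs.
id=1
for ip in 192.0.2.11 192.0.2.12 192.0.2.13; do
  echo "# broker $id"
  broker_overrides "$id" "$ip"
  id=$((id + 1))
done
```

The rest of server.properties stays identical across the three nodes, which keeps later configuration changes easy to roll out.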

Step 8: Create and start Kafka service.

Like Zookeeper, Kafka will also run as a systemd service. On Ubuntu, create a kafka.service file in /etc/systemd/system with the following content:

[Unit]

Description=Kafka

Wants=network-online.target

After=network-online.target

[Service]

User=root

Group=root

Type=simple

LimitNOFILE=65536

LimitNPROC=65536

LimitMEMLOCK=infinity

Restart=on-failure

RestartSec=5s

ExecStart=/opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties

Environment="KAFKA_HEAP_OPTS=-Xmx8G -Xms8G -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/data/logs/mps -XX:+CrashOnOutOfMemoryError"

[Install]

WantedBy=multi-user.target

To start the Kafka service, execute the following commands on all 3 Kafka nodes:

systemctl daemon-reload

systemctl start kafka

To make the Kafka service start automatically whenever the VM boots, enable it with the following command:

systemctl enable kafka

Step 9: Check status of Zookeeper and Kafka

To check the status of zookeeper service execute the following command on zookeeper nodes:

systemctl status zookeeper

To confirm the status of the Kafka service, execute the following command on the Kafka nodes:

systemctl status kafka

Step 10: Validate the set up.

Once the Kafka service has started on all three Kafka nodes, each broker should register itself with Zookeeper. To confirm this, connect to the Zookeeper shell using /opt/kafka/bin/zookeeper-shell.sh <zookeeper_ip>:2181 and run the following command:

ls /brokers/ids

The output of the above command should contain the broker ids of all the Kafka nodes.
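When this check is repeated often, comparing the listed ids against the expected ones by hand gets tedious. The sketch below does the comparison on a captured output string. It assumes the ids are printed as a bracketed, comma-separated list such as `[1, 2, 3]`, and `missing_broker_ids` is a hypothetical helper rather than part of Kafka.

```shell
# Hypothetical helper: $1 is the id list as printed (assumed to look like
# "[1, 2, 3]"); the remaining arguments are the expected broker ids.
# Prints every expected id that is absent from the list.
missing_broker_ids() {
  listed=$(printf '%s' "$1" | tr -cd '0-9,' | tr ',' ' ')
  shift
  for want in "$@"; do
    found=0
    for have in $listed; do
      [ "$have" = "$want" ] && found=1
    done
    [ "$found" -eq 1 ] || echo "$want"
  done
}

# Sample text standing in for real output, with broker 3 not registered.
output='[1, 2]'
missing=$(missing_broker_ids "$output" 1 2 3)
if [ -z "$missing" ]; then
  echo "all brokers registered"
else
  echo "missing broker ids: $missing"
fi
```

A missing id usually points at a broker that failed to start or cannot reach Zookeeper, so `systemctl status kafka` on that node is a reasonable next step.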

With this, your 3-node Kafka cluster is up and running.

Cheers !!!