Introduction

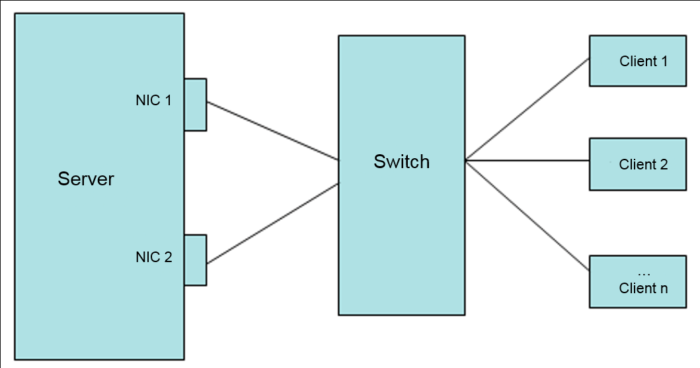

NIC teaming presents an interesting solution to redundancy and high availability in the server/workstation computing realms. With multiple network interface cards, an administrator can get creative in how a particular server is accessed, or create a larger pipe for traffic to flow to that server.

This guide walks through teaming two network interface cards on a Debian 11 system. We will use the ifenslave software to attach and detach NICs from a bonded device.

Before any configuration, the first thing to do is determine which type of bonding the system actually needs. As of this writing, the Linux kernel supports seven bonding modes, numbered 0 to 6. Some of these bond ‘modes’ are simple to set up, while others require special configuration on the switches to which the links connect.

Understanding the Bond Modes

| Mode | Policy | How it works | Fault tolerance | Load balancing |
|---|---|---|---|---|
| 0 | Round Robin | Packets are transmitted sequentially through each interface in turn, from the first available slave to the last. | Yes | Yes |
| 1 | Active Backup | One NIC is active while the other waits as a backup. If the active NIC goes down, another NIC becomes active. | Yes | No |
| 2 | XOR (exclusive OR) | The transmitting NIC is chosen by a hash of the source and destination MAC addresses (the default policy), so traffic for a given destination MAC always leaves through the same NIC. | Yes | Yes |
| 3 | Broadcast | Every transmission is sent on all slaves. | Yes | No |
| 4 | Dynamic Link Aggregation | The aggregated NICs act as one NIC, giving higher throughput while still failing over if a NIC fails. Requires a switch that supports IEEE 802.3ad. | Yes | Yes |
| 5 | Transmit Load Balancing (TLB) | Outgoing traffic is distributed according to the current load on each slave interface. Incoming traffic is received by the current slave; if the receiving slave fails, another slave takes over its MAC address. No special switch support is required. | Yes | Yes |
| 6 | Adaptive Load Balancing (ALB) | Works like TLB but also load balances incoming IPv4 traffic through ARP negotiation. Unlike Dynamic Link Aggregation, it needs no particular switch configuration, but the NIC drivers must support changing the MAC address while the device is open. | Yes | Yes |
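The bonding driver also knows each mode by name and accepts either the number or the name as the mode setting (for example ‘bond-mode 1’ or ‘bond-mode active-backup’). A small sketch of that mapping as a POSIX shell function; the function name bond_mode_name is hypothetical and only serves as a lookup table.

```shell
#!/bin/sh
# Hypothetical helper: map a bonding mode number (0-6) to the name
# used by the Linux bonding driver.
bond_mode_name() {
    case "$1" in
        0) echo "balance-rr" ;;
        1) echo "active-backup" ;;
        2) echo "balance-xor" ;;
        3) echo "broadcast" ;;
        4) echo "802.3ad" ;;
        5) echo "balance-tlb" ;;
        6) echo "balance-alb" ;;
        *) echo "unknown bonding mode: $1" >&2; return 1 ;;
    esac
}

# Print the full number/name table.
for m in 0 1 2 3 4 5 6; do
    printf '%s\t%s\n' "$m" "$(bond_mode_name "$m")"
done
```

Using the name in the interfaces file makes the configuration self-describing; the number is equally valid.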

1- Update and upgrade

Log in as root and run the update and upgrade commands:

apt update
apt upgrade

This guide uses Debian 11.
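To confirm that the system really is Debian 11 before going further, here is a sketch that reads /etc/os-release, a KEY=value file designed to be sourceable by the shell. The function name debian_major_version and its optional path argument (there only so it can be tried against a sample file) are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the major Debian version from an os-release file.
# The body runs in a subshell ( ... ) so sourcing the file does not leak
# variables such as ID or VERSION_ID into the caller's shell.
debian_major_version() (
    . "${1:-/etc/os-release}"
    [ "$ID" = "debian" ] || { echo "not Debian: $ID" >&2; exit 1; }
    # VERSION_ID is e.g. "11"; keep only the part before any dot.
    printf '%s\n' "${VERSION_ID%%.*}"
)

# Usage:
#   [ "$(debian_major_version)" = 11 ] || echo "warning: this guide was written for Debian 11"
```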

2- Install ifenslave package

The second step is to obtain the proper software from the repositories. On Debian the package is ifenslave, and it can be installed with apt:

apt install ifenslave
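A slightly more defensive variant, sketched under the assumption that it runs as root: it asks dpkg-query whether ifenslave is already installed and only calls apt otherwise. The function names pkg_installed and ensure_ifenslave are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if dpkg reports the package as fully installed.
pkg_installed() {
    # dpkg-query prints "install ok installed" for an installed package;
    # for an unknown package it prints nothing on stdout.
    [ "$(dpkg-query -W -f='${Status}' "$1" 2>/dev/null)" = "install ok installed" ]
}

# Hypothetical wrapper: install ifenslave only when it is missing (needs root).
ensure_ifenslave() {
    if pkg_installed ifenslave; then
        echo "ifenslave is already installed"
    else
        apt install -y ifenslave
    fi
}
```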

3- Load the kernel module

Once the software is installed, the kernel needs to be told to load the bonding module, both for the current session and on future reboots.
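In practice this usually means running modprobe bonding now and listing bonding in /etc/modules, which Debian reads at boot to load the modules named there, one per line. A sketch, assuming root; persist_module and load_bonding are hypothetical names, and persist_module checks for an existing entry so that repeated runs do not add duplicates.

```shell
#!/bin/sh
# Hypothetical helper: append a module name to a modules file
# (default /etc/modules) unless it is already listed on a line of its own.
persist_module() {
    pm_file=${2:-/etc/modules}
    grep -qxF "$1" "$pm_file" 2>/dev/null || echo "$1" >> "$pm_file"
}

# Hypothetical wrapper (needs root): load bonding now and on every boot.
load_bonding() {
    modprobe bonding || return 1    # load the module for the current session
    persist_module bonding          # make it load again after a reboot
    # A loaded module appears as a directory under /sys/module.
    [ -d /sys/module/bonding ] && echo "bonding module loaded"
}
```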

4- Create the bonded interface

Now that the kernel has been made aware of the module needed for NIC bonding, it is time to create the actual bonded interface. This is done in the interfaces file, located at ‘/etc/network/interfaces’.

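This file contains the network interface settings for all of the network devices attached to the system. This example has two network cards, eth0 and eth1; on your system they may use predictable names such as enp1s0 instead, so substitute your own interface names throughout.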

In this file, create the bond interface that enslaves the two physical network cards into one logical interface.
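A minimal sketch of such a stanza, built from the options explained in the following paragraphs. It is wrapped in a shell function that only prints the text, so the stanza can be reviewed before it is appended to the file. The address 192.168.1.10/24 and gateway 192.168.1.1 are hypothetical placeholders, and print_bond0_stanza is a hypothetical name. The ‘slaves’ option matches the name used in this guide; the Debian ifenslave documentation generally spells it ‘bond-slaves’.

```shell
#!/bin/sh
# Hypothetical helper: print an active-backup bond0 stanza for
# /etc/network/interfaces. Replace the placeholder address and gateway.
# Assumption: eth0 and eth1 should not keep address configuration of their
# own (for example an "iface eth0 inet dhcp" stanza); the bond owns them.
print_bond0_stanza() {
    cat <<'EOF'
auto bond0
iface bond0 inet static
    address 192.168.1.10/24
    gateway 192.168.1.1
    slaves eth0 eth1
    bond-mode 1
    bond-primary eth0
    bond-miimon 100
    bond-downdelay 400
    bond-updelay 800
EOF
}

# Review the output first, then append it (as root):
#   print_bond0_stanza >> /etc/network/interfaces
print_bond0_stanza
```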

The ‘bond-mode 1’ option determines which bond mode this bonded interface uses. Here, bond-mode 1 makes the bond an active-backup setup, and the ‘bond-primary’ option names the primary interface for the bond to use. ‘slaves eth0 eth1’ states which physical interfaces are part of this bonded interface.

The next few options determine when the bond should switch from the primary interface to one of the other slave interfaces in the event of a link failure. MII monitoring (miimon) is one way to monitor the status of bond links; the other is ARP monitoring, which checks links by sending ARP requests.

This guide uses miimon. ‘bond-miimon 100’ tells the kernel to inspect the link every 100 ms. ‘bond-downdelay 400’ means that after detecting a link failure, the system waits 400 ms before concluding that the currently active interface really is down and disabling it.

‘bond-updelay 800’ tells the system to wait 800 ms after a link comes back up before using that interface. Most importantly, both updelay and downdelay should be multiples of the miimon value; otherwise the kernel rounds them down to the nearest multiple.
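To see the rounding in numbers: with miimon at 100 ms, a delay of 400 ms or 800 ms is used as-is, whereas 850 ms would become 800 ms. A sketch of that integer arithmetic in shell; effective_delay is a hypothetical helper, not part of ifenslave.

```shell
#!/bin/sh
# Hypothetical helper: print the delay the bonding driver will actually use
# for a given updelay/downdelay and miimon, warning if it was rounded.
effective_delay() {
    ed_value=$1
    ed_miimon=$2
    if [ "$ed_miimon" -le 0 ]; then
        echo "miimon must be positive" >&2
        return 1
    fi
    # Shell arithmetic is integer-only, so divide-then-multiply rounds down.
    ed_result=$(( ed_value / ed_miimon * ed_miimon ))
    if [ "$ed_result" -ne "$ed_value" ]; then
        echo "warning: $ed_value ms is not a multiple of $ed_miimon ms; the kernel will use $ed_result ms" >&2
    fi
    echo "$ed_result"
}

effective_delay 400 100   # downdelay from this guide: prints 400
effective_delay 800 100   # updelay from this guide: prints 800
effective_delay 850 100   # prints 800, plus a warning on stderr
```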

5- Bring up the bonded interface

- ifdown eth0 eth1 – This will bring both network interfaces down.

- ifup bond0 – This tells the system to bring the bond0 interface online, which also brings up eth0 and eth1 as slaves of the bond0 interface.

If all goes according to plan, the system brings eth0 and eth1 down and then brings up bond0. Bringing up bond0 reactivates eth0 and eth1 and makes them members of the active-backup NIC team created in the interfaces file earlier.
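The two commands can be scripted, followed by a short wait for bond0 to report that it is up. A sketch, assuming root and the sysfs attribute /sys/class/net/<iface>/operstate; wait_for_up and its optional directory argument (there only so it can be tried against a scratch directory) are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: wait up to $2 seconds (default 10) for interface $1
# to report operstate "up". $3 is the sysfs net directory.
wait_for_up() {
    wu_iface=$1
    wu_timeout=${2:-10}
    wu_root=${3:-/sys/class/net}
    wu_i=0
    while [ "$wu_i" -lt "$wu_timeout" ]; do
        if [ "$(cat "$wu_root/$wu_iface/operstate" 2>/dev/null)" = "up" ]; then
            echo "$wu_iface is up"
            return 0
        fi
        sleep 1
        wu_i=$((wu_i + 1))
    done
    echo "$wu_iface did not come up within ${wu_timeout}s" >&2
    return 1
}

# Step 5 as one line (run as root):
#   ifdown eth0 eth1 && ifup bond0 && wait_for_up bond0 15
```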

6- Check the bonded interface status
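The kernel reports the state of each bond in /proc/net/bonding/<bond>, so cat /proc/net/bonding/bond0 shows the bonding mode, the currently active slave, and each slave's MII status and link failure count. A sketch of two helpers that extract the active slave and the per-slave failure counts; the function names are hypothetical, and the ‘Currently Active Slave’ line is assumed to be present, as it is for active-backup bonds.

```shell
#!/bin/sh
# Hypothetical helper: print the currently active slave of a bond.
active_slave() {
    # The header contains a line such as "Currently Active Slave: eth0".
    sed -n 's/^Currently Active Slave: //p' "${1:-/proc/net/bonding/bond0}"
}

# Hypothetical helper: print "<slave> <link failure count>" for each slave.
link_failures() {
    # Each slave block starts with "Slave Interface: <name>" and later has
    # a "Link Failure Count: <n>" line.
    awk -F': ' '/^Slave Interface:/ { s = $2 } /^Link Failure Count:/ { print s, $2 }' \
        "${1:-/proc/net/bonding/bond0}"
}

# Usage:
#   active_slave      # e.g. eth0 while the primary is healthy
#   link_failures
```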

7- Testing the Bond Configuration

We will disconnect the network cable from eth0 to see what happens.

Originally the bond was using eth0 as the primary interface. When the network cable was disconnected, the bond first had to detect that the link was down, then wait the configured 400 ms before disabling the interface, and finally make one of the other slave interfaces active to handle traffic.

This output shows that eth0 has had a link failure and that the bonding module handled it by bringing the slave interface eth1 online to keep carrying the bond's traffic.
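To repeat this test from a script instead of reading the status by eye, one option is to poll the active_slave attribute that the bonding driver exposes in sysfs (/sys/class/net/bond0/bonding/active_slave) until it changes. A sketch; wait_for_failover and its optional file argument are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: poll the bond's active slave for up to $2 seconds
# (default 30) until it differs from $1, then print the new active slave.
# $3 is the file to read; it defaults to the bond0 sysfs attribute.
wait_for_failover() {
    wf_old=$1
    wf_timeout=${2:-30}
    wf_file=${3:-/sys/class/net/bond0/bonding/active_slave}
    wf_i=0
    while [ "$wf_i" -lt "$wf_timeout" ]; do
        wf_now=$(cat "$wf_file" 2>/dev/null)
        if [ -n "$wf_now" ] && [ "$wf_now" != "$wf_old" ]; then
            echo "$wf_now"
            return 0
        fi
        sleep 1
        wf_i=$((wf_i + 1))
    done
    return 1
}

# Usage: start this, then unplug eth0's cable within 30 seconds.
#   wait_for_failover eth0 30 && echo "bond0 failed over"
```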

At this point the bond is working in active-backup mode as configured. While this guide only covered active-backup teaming, the other modes are just as simple to configure, although each needs different parameters depending on the mode chosen. Remember, though, that of the seven bond modes available, mode 4 requires IEEE 802.3ad support configured on the switch the system connects to, and modes 0, 2 and 3 generally need the corresponding switch ports grouped together as well.
